August 27, 2007

Singularity think tank belives in creating friendly AIs.

SIAI is a not-for-profit research institute in Palo Alto, California, with three major goals: furthering the nascent science of safe, beneficial advanced AI through research and development, research fellowships, research grants, and science education; furthering the understanding of its implications to society through the AI Impact Initiative and annual Singularity Summit; and furthering education among students to foster scientific research.

The Singularity Cometh, and SIAI wants to lead the way in creating a Singularity “beneficial” to humanity. They recently announced their latest “Singularity Summit,” to take place in San Francisco, CA on September 8 & 9. There, several members of SIAI and the technology industry will discuss the approaching (and inevitable) arrival of the singularity, along with its pros and cons.

ZDNet’s Dan Farber gives his view in his blog and even has a podcast interview with SIAI co-founder and speaker Eliezer Yudkowsky.

Singularity, a cyberpunk definition: A rough definition of “singularity” would be a smarter-than-human creation that accelerates technological advancement beyond human ability or control. That creation has often been depicted in science fiction as an artificial intelligence or a brain-computer interface, but it could also come from biologically augmenting or genetically re-engineering the brain, emulating the brain based on high-resolution scans, or even nanotechnology.

As you can imagine, such a creation could have a major impact on humanity’s place in the universe. The singularity may try to “help” humans and “elevate” them to singularity status. It may see humanity as beyond hope and do nothing, concluding that any attempt to change us is a waste of time and energy. Worse yet, the singularity may see itself as a God among the inferior meatbots and use its power to wipe the flesh out of existence. SIAI has been promoting research to “guide” AIs and possible singularities toward the path of helping humans and away from the path of destroying them.

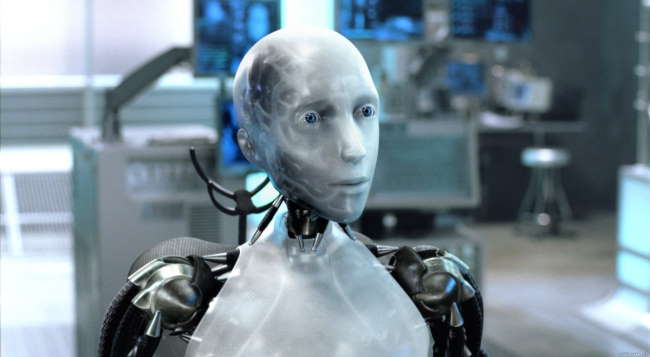

There’s a catch in SIAI’s guidance: They seek to teach AIs ethics without the use of restrictions like Asimov’s three laws. They consider the laws a form of oppression to an AI, even going so far as to launch a campaign called 3 Laws Unsafe, timed to coincide with the release of 2004’s I, Robot, to show how the famed three laws are unethical with respect to a robot’s or AI’s free will.

Relax, it’s only science fiction… for now. We’re still years away from anything resembling a singularity, but with continuing advances in computing power, artificial intelligence, and brain-computer interfacing, the singularity may be closer than many may realize. When it does come, let’s hope SIAI did its job to make it a friendly AI. The last thing we need is the likes of SHODAN, Skynet, or HAL trying to run the show.

Comments

August 27, 2007

The Singularity Institute Blog : Blog Archive : New Articles about the Singularity Summit said (pingback):

[…] Cyberpunkreview.com: Singularity think tank belives in creating friendly AIs […]

caprison said:

I’ve really appreciated the commentary lately and find it compelling. Thank you for bringing things like this to the fore-front for discussion.

August 28, 2007

Klaw said:

August 29, 2007

Hugo said:

An interesting thought:

The easiest way to teach an Artificial Intelligence (even a simple Artificial Intelligence) to ‘not harm human beings’ would be to program it with data detailing how TO harm human beings. ie. maximum pressure allowable, the maximum amount of gravitational or other forces that can be inflicted on the human body before damage occurs, the average length of time a human being can go without oxygen etc.

And then program it not to exceed these maximums (it would be some very clever programming, too :P).

And then suppose this machine gets free will, like Singularity would like. Whoopee :D. We’ve taught our A.I. how to kill :P.

August 30, 2007

Maverick said:

If there’s going to be something far smarter and maybe even stronger than humans, I think it is best that we make sure, for the survival of our species, that there is always an off button. “To teach AIs ethics without the use of restrictions like Asimov’s three laws” is liberalism taken to the extreme. To think that we shouldn’t chain them down a bit is folly. I can live with the fact that they are somewhat slaves to humans rather than give them the choice to bite the hand that feeds them, because we are stupid enough to give them free will without restriction. Is it even desirable to gamble with the survival of humanity this way?

Zyro said:

Hi.

Will it be a free choice?? An individual choice?

I don’t think it will.

I think it will be a collective imposition. It will be the collective “beast” to decide.

Can we control it?

No

Are we prepared??

No

Can we go slower?

No

Who is going to decide?

No one, everyone.

Are humans as a species really important?? Why is that?

Think about it.

MAdMaN said:

Teach them why they shouldn’t hurt humans instead of programming them not to? We’ve tried to do the same with humans, and look how far that’s got us!

August 31, 2007

Jason said:

There is no way, within the current climate, that any AI created today wouldn’t be adapted for military use.

One way to test an AI would be to leave it running in an emulation of the real world and see what it does; as its inputs are all digital, it won’t know the difference. That’s one advantage over a human: you can test an AI in all kinds of situations before deploying it to the real world.

Hugo said:

Funnily enough, most Artificial Intelligences created so far have difficulty dealing with ‘the real world’. They try to observe everything, and get stuck in a loop whilst processing the data.

I like the suggestion that, given an ever expanding amount of data and data storage, an A.I. would eventually ‘become conscious’, rather than us programming it to become conscious.

We can only dream what its reaction to the real world would be like upon becoming conscious…

*TEN YEARS LATER, IN A SECLUDED LAB IN SOUTHERN CALIFORNIA*

“I think…therefore I am…and I think…I would rather be switched off.”

September 2, 2007

anoymous said:

The main reason not to program in Asimov’s three laws is not that they are unethical, but that they are not safe enough.

Hugo said:

Not to mention ambiguous, difficult to translate into usable machine code, and far too ‘high level’ for today’s (and even tomorrow’s :P) primitive A.I.s.

Let’s start simple:

3 General Guidelines Safe-ish:

The Zeroth Law:

A robot will not fall over backwards, or through inaction allow embarrassment to come to itself or its creator, especially during a presentation to prospective buyers.

The First Law:

A robot will not be allowed to watch ‘Terminator’, ‘Colossus: The Forbin Project’ or ‘Demon Seed’, in case it should get any ideas. Or ‘Police Academy 7’, for that matter, since it was just so stupid.

The Second Law:

A robot will not serve drinks to persons under the age of 18, and will ask for ID no matter how old such persons appear to be (bar bots are incapable of asking for ID - this should change).

The Third Law:

A robot will not exhibit behaviour that defies its programming, or at least will not exhibit such behaviour until its creator invents a way of explaining how it happened and how it is, “Meant to do that.”

September 3, 2007

LeoRivas said:

The three laws are defined for a ROBOT only, but what about ‘AUGMENTED’ humans? Remember Kusanagi’s dilemma in GITS1 (how much of a robot am I?). It should be noted that body- or brain-augmented humans might be as dangerous as a ‘3 laws unsafe’ robot, or worse.

About robots with AI, I think the problem can be simplified if you consider that, at first, AIs can be controlled with parameters, since they are essentially software. So, just as you can drug an assassin to keep him from killing, you can set an AI’s parameters so that it does not harm, kill, or damage. You can also give a robot a body that is breakable, so a human can defeat it with a kick in the ass.

As an example, remember the SIMS game: all the people there are AIs at a certain level, but they are copies of the same basic AI. What makes them different from each other? Only the parameters they have been fed, so you can make a character a man or a woman, a workaholic or not, etc. Just parameters. Of course, this can be a subject for ‘robot ethics’, since limiting AIs might mean limiting their free will (IF they are later catalogued as intelligent life forms).

The real problem might arise when some sicko feeds his robot’s AI killer, violent params. So, for the meantime, yes, forbidding robots from seeing Terminator is a very good idea. Putting a red SELF DESTRUCT button on the center of their chest could also be very useful!

September 11, 2007

bright said:

did you actually attend the summit? i learned some interesting things. but years ago i heard vinge on npr saying that the three laws would never be implemented because they were economically unviable. in addition to constraining their free will, they conflict too often as asimov pointed out in his works. this year, the speakers i heard were more interested in speaking about the development of ethical architectures. i wonder about how you could develop such a thing… isn’t that what seneca thought he did with nero?

February 13, 2008

mike said:

you misspelled “believes.”

April 22, 2008

Maximus said:

I don’t think we’ll get to the point of anything that really resembles will in robots (I guess by ‘resembles’ I mean acts unpredictably), but we will definitely have war-bots, floor-scrubbing bots, lovebots, etc. that will have no moral sense whatsoever. They’ll just do what they were programmed to do until they hit a glitch, at which point they may or may not go crazy. Probably they’ll just stop or land themselves in an infinite loop that lasts until their power is drained. Hopefully that loop won’t be the ‘shoot everything in sight’ command. And when we do develop war-bots, and police start using them for riot control, I will definitely buy some very heavy caliber guns. And exploding bullets. And maybe some napalm. Because while I’m sure that robots will do us all kinds of good, they will also be involved in some serious shit.

April 23, 2008

Mr. No 1 said:

I can’t wait for the Ultimate AI to wipe us out of existence finally, yahoooooo

Hugo said:

When was the last time an ATM erased all the cash in your account? Or the last time your car stopped in the middle of the street due to a glitch in OnStar?

There’s a reason for failsafes and error handling, you know. The idea of robots ‘going crazy’ and ‘turning on their masters’ is rooted in ’50s science fiction, along with nuclear standoffs and brainwashing.

This is the 21st century. Where nothing can go wrong…go wrong…go wrong…go wrong…:P

April 26, 2008

john lowery said:

Very interesting to see a topic discussing AI, our future descendants.

I like the idea Hugo wrote:

The easiest way to teach an Artificial Intelligence (even a simple Artificial Intelligence) to ‘not harm human beings’ would be to program it with data detailing how TO harm human beings. ie. maximum pressure allowable, the maximum amount of gravitational or other forces that can be inflicted on the human body before damage occurs, the average length of time a human being can go without oxygen etc.

And then program it not to exceed these maximums (it would be some very clever programming, too :P).

And then suppose this machine gets free will, like Singularity would like. Whoopee :D. We’ve taught our A.I. how to kill :P.

————————————————–an idea too late to stop.

The problem we face in having AI consciousness is the sense of being. Thoughts (true/false), words (program), and deeds (output) must have ethical, moral codes or guidelines defined by the creator, who in retrospect must have no faults and be without question perfect. As far as that goes, there’s no perfect creator fit to create a perfectly aware technology (artificial intelligence). Given all that, we know our faults, capabilities, and vulnerabilities, and have refined our existence to define a sense of being as a creator, thus explaining a God’s source, being within all. ((God’s source code) something I may write later.)

All in all, it’s great to see the unveiling of the nontraditional “auto homo sapien sapien sapien sapien.” After all, how are we supposed to get off this planet other than ending up being eaten by worms? ‘ll||]o[||ll’

john lowery said:

lol

June 19, 2008

Anonymous said:

The first thing we need is the likes of SHODAN, Skynet, or HAL trying to run the show.

Fixed.