February 2, 2009

Do Humanlike Machines Deserve Human Rights?

Source: Wired

A burning question. Wired’s Daniel Roth asks the important question of what rights robots should have when they reach human levels of sentience. Something to get the philosophers, religious fruitcakes, and robot-rights activists talking about:

This question is starting to get debated by robot designers and toymakers. With advanced robotics becoming cheaper and more commonplace, the challenge isn’t how we learn to accept robots—but whether we should care when they’re mistreated. And if we start caring about robot ethics, might we then go one insane step further and grant them rights?

Apparently Mr. Roth has already sided with the pro-human forces, mainly because of his dislike for the animatronic Elmo dolls and a little Kool-Aid from Fisher-Price’s marketing Veep Gina Sirard:

Of course, that’s what corporations, governments, slave owners, and dictators have been saying about people for centuries. They’re only toys now because the technology has not progressed to the point where robotic humanity is possible… but once it does…

Given events in places like Auschwitz, the former Yugoslavia, Guantanamo, and the World Trade Center, I often wonder if humans deserve human rights. Maybe some competition from the machines will snap the species out of its narcissistic slumber. Right now is the best time to recognize robot rights… otherwise…

“It sits there looking at me, and I don’t know what it is. This case has dealt with metaphysics, with questions best left to saints and philosophers. I am neither competent, nor qualified, to answer those. I’ve got to make a ruling – to try to speak to the future. Is Data a machine? Yes. Is he the property of Starfleet? No. We’ve all been dancing around the basic issue: does Data have a soul? I don’t know that he has. I don’t know that I have! But I have got to give him the freedom to explore that question himself. It is the ruling of this court that Lieutenant Commander Data has the freedom to choose.”

- Captain Phillipa Louvois (Star Trek: The Next Generation “The Measure of a Man”)

Comments

February 2, 2009

Klaw said:

Since when did Elmo represent sentience? That’s a huge uncanny valley you are expecting me to Evel Knievel jump there, sir, especially with one revered in the furry community.

Illogic said:

Maybe the robots can help us decide what makes humans human?

I think we need to figure that out, or at least find some sort of general principles to base it on, before we can decide if a robot (android?) is equally human in its own sense.

Mr No 1 said:

“I often wonder if humans deserve human rights” - Ah, man, every day I hope to see that eagerly awaited mushroom cloud on the horizon. It’s not a matter of deserving but of freeing ourselves and the rest of the universe from this plague (I’ve always identified with Agent Smith).

Of course, I don’t believe nuclear holocaust would finish us off but it’d be a nice view.

KBlack said:

“Right now is the best time to recognize robot rights”

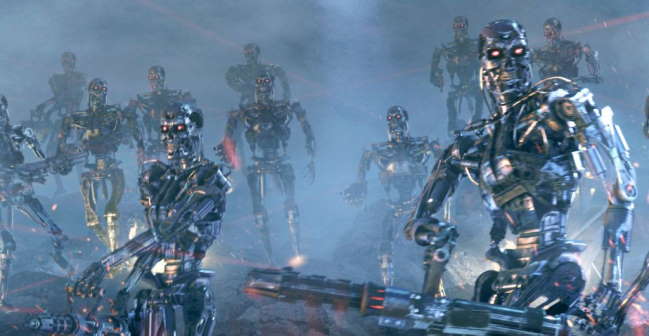

Really? Even if we don’t know what a sentient robot is going to be capable of, or what it will “feel” like, etc.? Isn’t it a little early? I mean, I LOVE robots. They’re the reason I go to school every day. But granting rights to something that hasn’t even been proven possible seems a little risky to me. I don’t really believe any Terminator-style war will happen, but such pre-emptive laws could easily be abused by humans, or even by robots when (and if) they become aware of them.

Think of it: let’s say you build or buy a sentient robot. It breaks, but the machine “refuses treatment”. You’re now stuck with a useless pile of metal where you once had a state-of-the-art robot that could be used in many ways, just because it didn’t want to be repaired.

I know that’s silly, but I couldn’t come up with another example. We just have NO idea what a robotic life form will look like, nor how it will interact with us. We can’t grant them rights just yet, but thinking about it and following the issue closely is a good idea.

Immortal_Peregrin said:

I think having another sentient creature on the scene, synthetic or not, would further our understanding of what makes us human.

Mr. Roboto said:

It looks like the robot rights war is already on. There’s a report from California Polytech about the ethics of autonomous military robot usage. You can grab the PDF here.

Again, Wired weighs in on the issue. They also have a link to the report.

February 3, 2009

Kovacs said:

This is a joke. Too many humans out there have no basic rights. And what about animals? Pets have more rights than the homeless.

All this is good for is an abstract exercise in order for us to look at ourselves better.

Immortal_Peregrin said:

I suppose the question is when does a robot cross the line between sentience and programming? Is there even a difference? I think it could be argued that humans are the product of our genetic programming. Does our sentience arise from our programming?

February 4, 2009

Stormtrooper of Death said:

Case: A sentient robot walks the streets, and sees a child almost run over by a car. The sentient robot jumps in front of the car, gets severely damaged and is too damaged to continue working…

But it was a sentient robot…

Now, if a human had saved the kid, that human would become an instant hero.

But what about the sentient robot that gave up its robotic life to save a kid’s human life? Wouldn’t it be fair to grant that damaged robot hero status?

The kid survived, no matter whether it was rescued by a human or a robot, so I might presume the robot would also be granted hero status, and would perhaps be repaired and given more rights, since the sentient robot saved a human life even though it was not required to do so…

aikeru said:

A robot is simply a machine.

Does your can opener deserve rights? Does your laptop computer deserve rights? Does your cellphone deserve rights? It’s ignorant and naive to think that robots will ever achieve sentience.

Break the elaborate illusion of a humanlike robot into its basic parts and the illusion falls apart. Too much sci-fi/Hollywood.

I’m a professional software developer. A computer is a machine that follows a list of instructions, called programs. Add two values, display the color blue, eject the CD tray — this is not intelligence. A computer is programmed to respond to input. When you press a key, there is programming to display the corresponding letter on the screen.

It’s no different when responding to other user input, be it facial/voice recognition or running an algorithm against an optical device to determine that one object will come into contact with another object in motion, and that the first object was determined to be a human (while no significance or understanding is attached to what a “human” is) and the second object is a car (again, the same).

No, a robot could never earn “hero” status. Everything that follows is simply more 1s and 0s. The computer on your desk is not in any way magical, though it might seem so to someone uneducated or ignorant.

It’s the same with robots. Sorry, go back to your dreams or live in ignorance.

I hope this helps clarify.

micyia said:

Robots will definitely become equal and then superior to humans in all aspects. We are pretty far off from creating such a machine, but eventually it will happen.

Let’s assume that an alien scientist visited Earth three billion years ago, when the only lifeforms on Earth were bacteria. If he had used the same logic as aikeru, he would have concluded that the lifeforms we have evolved from are tiny organisms that follow some instructions.

The scientist would have concluded that the bacteria aren’t sentient beings. Three billion years later, some of those bacteria have evolved into humans.

The question isn’t whether we are eventually going to build robots that think (or process information) like us, but whether we would go one step further and create a form of evolution for robots as well.

As for the example of the robot saving a kid from a car, a robot could be considered a hero only if that machine has a survival instinct that warns it about the danger involved in saving the kid, but the robot chooses, for whatever reason, to ignore that programming and save the kid.

A robot with no knowledge of its existence and of the consequences of saving a kid from a car should not be considered a hero.

Anyway, we have so many more serious issues to deal with as humans for the time being. We should start worrying about giving rights to machines when the time comes, and that time won’t be in our lifetime anyway, so let future generations worry about it.

Sorry for my English.

February 5, 2009

Mr No 1 said:

aikeru, talking like that is a bit over the top. You don’t know the future, and treating your word as an axiom just because you work with software is… well, not right. Apart from that, you simply deny any possibility and decline to give your opinion about the “if”.

But then again, I don’t care much, I’m just a bit pissed off today =)

BTW, I do not think we humans will create the ultimate thinking machine/sentient robot, but I do reckon IT will exist.

February 8, 2009

Nickodemus said:

Do Humanlike Machines Deserve Human Rights?

The moment I read this I instantly thought about a conversation between a man named Daniel and a woman named Elaine. For any curious minds, check out “If They Give You Lined Paper, Write Sideways” by Daniel Quinn, pages 82 onward. Tell me what you think and how it relates to this question.

The way I see it, whether they “deserve it” will not mean much. It will be down to the one(s) who decide whether it should be a law. Even if it were a law, that does not mean they would always receive those rights. Much like humans and “their” rights.

I could go on and on, but I’d really like to hear someone else’s opinion after they read the conversation.

Nickodemus said:

Sorry about the grammar mistakes

j said:

This is flawed, I think. What if the robot didn’t look ‘human’ at all? Would you care about the rights of a large square-shaped robot as opposed to a human-looking robot? At this point you might start pointing out the Companion Cube from Portal, I guess, but that’s more about sentimentality than rights. Seems to me it’s sort of like how PETA wants all ‘animals’ to have rights, but really they seem to care only about ‘mammals.’

In any case, we’re nowhere near sentient robots. Maybe we can make programs that can “think” to the extent that they essentially have a lookup table of proper responses, but they’re not processing the meaning of anything. They’re just spitting out what has been input into them before; there’s nothing remotely creative about it. The best we’ll be able to make are robots that can ‘learn’ repetitive motions, so you might have them adapt to varying environments in space, say. Robots for research, but not as human replacements. And the term ‘meatbot’ is idiotic.

February 9, 2009

Diligent Ape said:

Captain Phillipa Louvois is right I think. It’s basically a phenomenological question; is there anything “in there” that registers the emotions? Is there a “ghost in the shell”? We have no way of ascertaining whether it’s the case.

There are theories which state that even humans start out soulless, only acquiring consciousness once their brain and/or language has developed to a certain level of complexity. Does that mean that babies shouldn’t have human rights, then? No, of course not.

I say let’s play it safe. If we make these creations, we should take heed of the Frankenstein myth and treat our children/creations with a fair amount of dignity. Yes, it might seem impractical and maybe even superstitious, but seriously, I don’t want to have to flee from either Cylons or Terminators. And personally, I’d feel good treating my robot assistant with dignity; I’d feel like I had company instead of being alone *sob*.

If I were Christian, I’d argue that robots do not need rights because, unlike humans, they have no soul. But I’m not a Christian, and my sentiment is that consciousness can be created synthetically (but that’s just *my* belief).

That being said, some might say it’s a bit early to even be discussing these things. I disagree; we need to know where we stand before it’s too late. Besides, it would be awesome to have a UN declaration of robot/android rights.

February 10, 2009

wietrzny said:

Nowadays we cannot determine whether a robot has a “soul”, consciousness, or anything else. We cannot even say why we believe that the girl next to us is conscious, or the guy who delivers pizza!

What do we know about them?

They’re just another black box: we can see how they behave and what they try to communicate, but that’s all. We believe they are conscious, therefore they have some rights. Or we believe they are God’s children, so they have a soul…

This is already a bet. Just a bet!

So if we treat our neighbors “right” and they are “human”, we don’t violate their rights. What if they are not equal to us? Well, it’s better to be too generous, “too human”, and hurt no one than to make a mistake and hurt some human.

It has been too difficult to grant such rights to animals; we have two (or three) arguments against it:

1) We cannot talk to them, so we cannot be sure whether they are conscious.

2) They are just animals, not God’s children, therefore they have no soul (as some Muslims say of women, or as someone or other says of African Americans, Jews, or any other race or society).

3) It’s useful for us to have something similar to us but different. Different in the sense that “it” has no rights. It’s just food, just a means to test new drugs, etc.

Nowadays we’re attempting to create a “thing” that’s certainly a thing but can communicate and think. This is what lets us overcome the first argument: we can ask “it”, we can communicate with “it”.

Of course, it’s a thing and therefore not one of God’s beloved children; “it” has no soul. However, we, as its god, create its mind and will switch it on; we’ll give “it” life.

There is also the third argument: things, animals, women, or any other slaves are just useful to us. Therefore we cannot give them rights, because that would be economic, emotional, or social suicide! I’m afraid this is the only reason for our special position and greater rights.

IMHO we can give rights to any “blackbox” that may appear conscious, any “blackbox” that might feel pain or pleasure, just in case it really possesses these qualities. That’s the logic.

There’s also a question: should we rewrite our laws in a way that allows any kind of “blackbox”, present or future, to ask for its rights? That’s ethics.

Who is a “blackbox”? Well… probably I’m a blackbox to you and you’re a blackbox to me; we cannot share our minds, we have to communicate.

Diligent Ape said:

I agree with Wietrzny, it would be impossible to grant robots human rights because they’re supposed to be our tools. If they were to have human rights, then they would have a lot of rights that would prove impractical for us.

I think the answer would be, maybe, to give them robot rights. Because they will probably be very different from humans, they won’t require the same things as we do. If they have no pleasure-pain feedback system, for instance, some of the human rights simply would not apply.

One thing is whether there is a consciousness to experience things; another is what the creation might feel to begin with. It’s not impossible to imagine a machine that actually felt pleasure when engaged in backbreaking labor or felt pain when idling.

I don’t think the answer would be human rights, but rather specific rights that fit the creation better. Still, this may be a slippery slope, because this was sometimes used as an argument for not giving slaves human rights. They were so “obviously” different from the people the rights were made for to begin with; hard work, it was said, was probably good for them, and too much leisure would end in drinking and worse.

I definitely feel that organisms that are able to feign consciousness should have some sort of rights. But what the nature of these rights should be, is a hard nut to crack.

February 23, 2009

LockeJV said:

What happened to the guy who was arrested for holding up the protest sign? There were only two entries in his blog, the last of which stated his court date and that the ACLU was going to help him.

LockeJV said:

The case was dropped - from the ACLUPA site:

(Berks Co.) In April 2007, Charles Kline displayed a sign that read, “Equal Rights for Robots,” during a confrontation between pro- and anti-gay demonstrators on a public university campus. He was arrested and charged with disorderly conduct at the direction of campus security.

The ACLU-PA provided representation and in July 2007 the District Attorney dropped the charges at the preliminary hearing. Kline v. Kutztown University, Berks Co. Dist. Mag.; Willis; Roper

March 17, 2009

Mike said:

Love your site. Does anyone know anything about this?

The Spanish cyberpunk film Sleep Dealer supposedly got some inspiration from the cool Mexican short film Omega Shell. I’ve seen it and it’s really interesting, definitely Mexican cyberpunk.