July 28, 2009

Will Machines Outsmart Humans?

Source: NY Times, original story by John Markoff.

Cyberphobes, please.

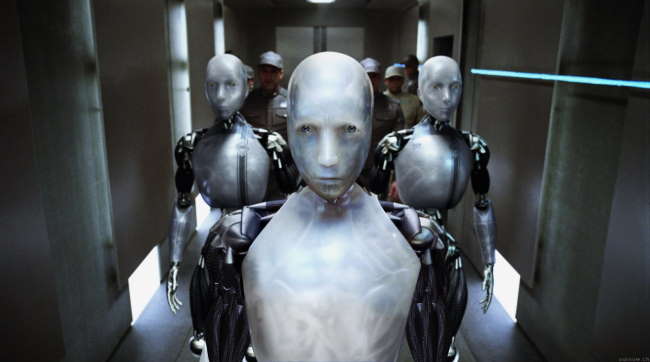

Impressed and alarmed by advances in artificial intelligence, a group of computer scientists is debating whether there should be limits on research that might lead to loss of human control over computer-based systems that carry a growing share of society’s workload, from waging war to chatting with customers on the phone.

Their concern is that further advances could create profound social disruptions and even have dangerous consequences.

Earlier this year (in February) a group of scientists from the Association for the Advancement of Artificial Intelligence met at the Asilomar Conference Grounds in California to discuss the possible impacts of human-level artificial intelligences, aka “The Singularity.” A report from the conference will be released later this year… we hope. The conference discussed issues that might arise from the Singularity and the loss of human control over cybernetic technologies. Topics included the possible effects of a “robotic takeover” leading to massive job loss, legal and ethical problems in dealing with human-like AIs, and maybe some plans in case a HAL, SHODAN, or Skynet should go online.

The Singularity Time Table. Depending on who you ask, the Singularity will appear definitely before 2050, and possibly as soon as 2020. Even so, that may be later than we think, as scientists say that they can create a working human brain in 10 years. More recently, Chinese scientists have reportedly been able to grow mice from skin. It shouldn’t be too hard to imagine human clones before long, and with them the possibilities of the Singularity. But just as another meeting at Asilomar dealt with genetics in the mid-70s, this conference deals with cybernetics. Specifically, how to proceed with AI research that will benefit humanity and eliminate the possibility of a HAL/SHODAN/Skynet.

The A.A.A.I. report will try to assess the possibility of “the loss of human control of computer-based intelligences.” It will also grapple, Dr. Horvitz said, with socioeconomic, legal and ethical issues, as well as probable changes in human-computer relationships. How would it be, for example, to relate to a machine that is as intelligent as your spouse?

Dr. Horvitz said the panel was looking for ways to guide research so that technology improved society rather than moved it toward a technological catastrophe. Some research might, for instance, be conducted in a high-security laboratory.

Comments

July 28, 2009

Rushnerd said:

Singularity IN MY LIFETIME?? I strongly, strongly do not believe this. It will obviously happen in the next 100 or 200 years, but 40?? We haven’t even moved away from silicon chips yet, we have a long, long, long, long way to go still.

?????? said:

Not with that attitude ;p

Mr No 1 said:

Why is it always about how we can use them? Everything is utilitarianism for us. If the robots we create are not selfish a-holes like ourselves, they will definitely get rid of humanity. It’s the only intelligent thing to do. Who wants millions of meat bags doing nothing but destroying the environment while they reproduce when there aren’t even enough resources for our numbers already?

Up “Psychotic” IA’s!

wietrzny said:

Mr No 1 might be right, but that means: “let’s commit suicide - earth will feel better” so why not commit this “suicide” via robotics?

I’m afraid we pay too much attention to robotics, singularity, technology … and we’re looking in the wrong direction.

In the 19th century a similar fear arose because of mass production, industrialization, etc. These fears have an economic cause - that’s all.

This new technology probably shows us again that human work is no longer needed, neither in craft nor in war - that’s all.

We need a cure for another disease: man no longer needs to work, yet only work allows one to earn enough to eat, to dress, to live…

Our attempts to solve this problem were 1) war (including Nazism, fascism …) and 2) communism (Marx, Lenin or social services), and both failed.

Why are we afraid of AI? Do we expect judgement day? Do we expect a massive breakdown because of a new order? Do we just treat technology like the witches of Salem - our poverty, our misery is “their fault”?

An optimist would say AI can find a new, better order. I’m more pessimistic: technology is just another stick, another bow - it can’t work better than we create it (though it can fail or work worse). Either we find a way to face our civilisation’s problems, or we can’t show our children, the machines, where to look for solutions to our problems and to theirs. The Singularity might be as lonely and helpless as we are in this world.

P.S. Any machine might be selfish or not selfish, as long as its own nature, will, temper, (…) internal program, (…) makes it useful to us.

August 1, 2009

Christopher said:

That sounds cool (be honest). But seriously, they will never copy a human mind, not in ten years, not in 200 years. Maybe a clone, but a technological copy would require that we understand the brain 100% and after that even improve and optimise it. All of that sounds like fantasy to me and, from what I’ve heard, to a large share of scientists as well. The problem is not only an intellectual one, but also one of ambition, money and time. This doesn’t happen accidentally.

But I think this conference is important, as there don’t need to be humanlike intelligences for ethical problems to arise, even if “only” (less cool) because of confusion on the human side when interacting with an advanced system.

August 26, 2009

blue video face said:

This is the same kind of anxiety that the Frankenstein story is about. But what it really amounts to is that human nature sucks, and so unhinged technological progress is going to outer that suck-ness into our machines and destroy us. Like some Freddy Krueger getting you in your nightmares.

September 17, 2009

green picture ass said:

Hey Mr Roboto, I’ve found a recent video on YouTube posted by newscientistvideo which could interest you: http://www.youtube.com/watch?v=SmOO_ghwVII&feature=fvhl

September 20, 2009

Ghost said:

I’m not sure if anyone has mentioned this yet, but the Singularity is supposedly going to occur in 2029, according to leading theorist Ray Kurzweil. He has accurately predicted cyber and computer trends for decades, including the formation of the internet. Look him up, his words may astound you with their plausibility.

October 9, 2009

LendonX said:

If you ask me (not saying anyone is), whether it be 100 years from now, 40 years, 10, or tomorrow, once robots are intelligent enough to fit cozily into society, the robot takeover will be fueled 100 percent by the humans themselves.

In Japan, there is already a belief that all machines have a soul, and it is within human nature to believe living, conscious things deserve rights. Soon people will be wanting robots to have the same rights as humans, and it will be the humans who start this.

Something tells me it will be much like the civil rights movement. Slowly a transition from slave-like machines to citizens will take place, and a sour period of anger toward machines after.

btw, the concept of robots having souls suggests that there is no immaterial soul, therefore no God. Who are the ones who have slowed all civil rights movements? Remember Milk? Remember M.L.K.?

A robot is going to take a shot to the hard drive someday, and there is going to be an outrage. This robot’s death will actually push us ever further toward the acceptance of robots as people.

With androids we cannot tell apart from humans, we will slowly disappear, and millions of years from now robots will be trying to find the missing link between them and humans.